Large language models (LLMs) are all the rage these days. They can write (occasionally buggy) code, help you write generic-sounding prose quickly…but can they write ungrammatical Christmas songs? Call me a Luddite, but I’m skeptical.

Luckily, there is a language model out there that doesn’t require 1TB of GPU RAM and a nuclear-reactor-enabled data center to .eval(). In fact, with a little determination, you could probably run it natively in a spreadsheet…or with pen and paper.

That’s right, I’m talking about Markov chains (of the first order).

But remember when you gave it must be Let's lay here tonight But it's a rock Where the old and the old and that's not a new year Sure did see mommy kissing santa comes around the mistletoe hung by the king pa rum bu bum We all year i'll give us annoyed And i want for christmas christmas is coming to need you better be glad you're sleeping You my wish come And a beard that's past We wish you baby I'll have a crowded room friends on his sled Must be

Markov chains are a simple yet ubiquitous probabilistic model that assumes that the probability of what happens next only depends on what is happening now. For a language model, this means that the probability of what word (or token if you’re a nerd) comes next only depends on what the current word is. If we run this process repeatedly, word-after-word, we get things resembling sentences.
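Here is a minimal sketch of that word-after-word process in plain Python. It is not the notebook code behind this post (that hasn't been shared yet). The function names `build_chain` and `generate` and the toy corpus are made up for illustration. Every observed follower is stored in a list, duplicates included, so `random.choice` picks each next word in proportion to how often it followed the current word.

```python
import random
from collections import defaultdict

def build_chain(text):
    """Map each word to the list of words that directly follow it in the text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, n_words, rng=random):
    """Walk the chain: each next word depends only on the current word."""
    out = [start]
    for _ in range(n_words - 1):
        options = followers.get(out[-1])
        if not options:  # dead end: this word was never followed by anything
            break
        # Repeated entries in the list weight the choice by observed counts.
        out.append(rng.choice(options))
    return " ".join(out)

# A made-up toy corpus, not the lyrics used in this post.
corpus = "jingle bell jingle bell jingle bell rock jingle bell swing and jingle bell ring"
chain = build_chain(corpus)
print(generate(chain, "jingle", 12, random.Random(0)))
```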

I can’t say I’m a huge fan of Christmas pop/rock songs (but I can evaluate its likelihood). Also, I think they are corny.
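For the curious: evaluating a sentence's likelihood under a first-order chain just means multiplying the transition probabilities along it, or summing their logs to avoid underflow: log P(w_2, …, w_n | w_1) = Σ log P(w_t | w_{t-1}). The sketch below assumes probabilities are estimated by plain counting with no smoothing, conditions on the first word rather than scoring it, and uses a made-up toy corpus. `bigram_model` and `log_likelihood` are hypothetical names.

```python
import math
from collections import Counter

def bigram_model(text):
    """Estimate P(next | current) by counting word pairs (maximum likelihood)."""
    words = text.split()
    pair_counts = Counter(zip(words, words[1:]))
    from_counts = Counter(words[:-1])  # how often each word has a successor
    return {pair: c / from_counts[pair[0]] for pair, c in pair_counts.items()}

def log_likelihood(model, sentence):
    """Sum of log P(w_t | w_{t-1}); -inf if any transition was never observed."""
    words = sentence.split()
    total = 0.0
    for pair in zip(words, words[1:]):
        p = model.get(pair, 0.0)
        if p == 0.0:
            return float("-inf")
        total += math.log(p)
    return total

corpus = "jingle bell jingle bell jingle bell rock jingle bell swing and jingle bell ring"
model = bigram_model(corpus)
print(log_likelihood(model, "jingle bell rock"))  # log(1) + log(1/5), about -1.609
print(log_likelihood(model, "rock jingle bell"))  # 0.0: every step was certain in this corpus
```

Any word pair that never appears in the corpus gets probability zero, so a single unfamiliar transition sends the whole score to negative infinity. Practical models usually smooth the counts to avoid that.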

Guide us some decorations of dough She didn't see And practicing real famous cat all your soul of mouths to see me I've got it over Underneath the jingle bell time for you will you dear if you cheer You gave you and bright The very merry christmas girl Jingle bell jingle bell rock the halls Let love you dismay I want it felt like i'm watching it over again So long long distance It's lovely weather for christmas all year it always this christmas to succeed

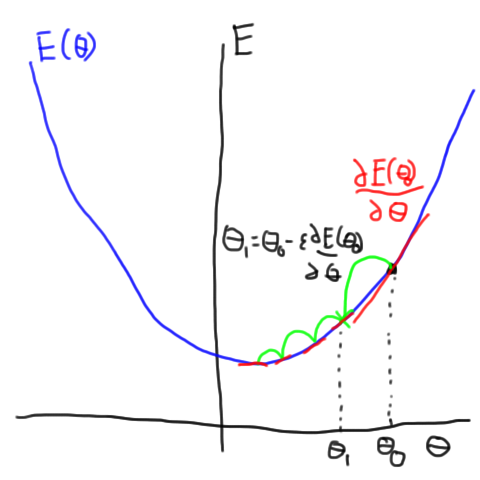

But I don’t want to deal with “gradients” or “descent” or the latest attempt to retcon RNNs (I prefer my BPTT to run sequentially and then vanish…or explode). In the words of Todd Rundgren: “I don’t want to work, I just want to” histogram some lyrics I copied off the internet.

So. I downloaded the lyrics to 30-some pop/rock Christmas songs, fired up a Jupyter notebook, deleted some punctuation, and (with a mere one vector and one matrix) started generating lyrics, which I’ve posted here.
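The "one vector and one matrix" are presumably a probability distribution over starting words and a word-to-word transition matrix. The sketch below shows one way to build both with NumPy. It assumes each lyric line is a separate sequence whose first word feeds the start vector, and its punctuation-stripping regex (which keeps apostrophes) is a guess, not the author's actual preprocessing. `tokenize`, `fit`, and `sample` are hypothetical names, and the two-line corpus is made up.

```python
import re
import numpy as np

def tokenize(line):
    """Strip punctuation (keeping apostrophes, so "I'll" survives) and split on whitespace."""
    return re.sub(r"[^\w\s']", "", line).split()

def fit(lines):
    """Build the start vector pi and transition matrix P from tokenized lines."""
    vocab = sorted({w for line in lines for w in line})
    index = {w: i for i, w in enumerate(vocab)}
    pi = np.zeros(len(vocab))
    counts = np.zeros((len(vocab), len(vocab)))
    for line in lines:
        if not line:
            continue
        pi[index[line[0]]] += 1              # histogram of first words
        for a, b in zip(line, line[1:]):
            counts[index[a], index[b]] += 1  # histogram of word pairs
    pi /= pi.sum()
    row_sums = counts.sum(axis=1, keepdims=True)
    # Normalize each row to sum to 1; rows of words never followed by anything stay zero.
    P = np.divide(counts, row_sums, out=np.zeros_like(counts), where=row_sums > 0)
    return vocab, pi, P

def sample(vocab, pi, P, max_words, rng):
    """Draw a start word from pi, then repeatedly draw the next word from row P[i]."""
    i = rng.choice(len(vocab), p=pi)
    out = [vocab[i]]
    for _ in range(max_words - 1):
        if P[i].sum() == 0:                  # dead end: no observed successor
            break
        i = rng.choice(len(vocab), p=P[i])
        out.append(vocab[i])
    return " ".join(out)

# Usage on a made-up two-line corpus (not the real lyrics).
lines = [tokenize(s) for s in ["Jingle bell, jingle bell", "Jingle bell rock!"]]
vocab, pi, P = fit(lines)
print(sample(vocab, pi, P, 10, np.random.default_rng(0)))
```

Row `i` of `P` is the histogram of words that followed word `i`, normalized to sum to one. A word that only ever appeared at the end of a line has an all-zero row, so sampling stops there.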

Side note: AC/DC released a Christmas song titled “Mistress for Christmas” in 1990 about Donald Trump cheating on his wife. I had no idea.

You baby I'm watching it from tears 'cause i believed in trouble out you guys know what time i know And unto certain Ding dong Oh my bedroom fast asleep A merry-go-round You and cheer A beard that's white I thought you gave you make us some money to the halls Don't want to set before the world will share this one Hurry tell him hurry down

Mostly, what I’ve learned is that Christmas songs are weird and often not really about Christmas at all. I’ll post the data and code soon so that you too can generate bad Christmas lyrics using AI.

My bedroom fast asleep Ho ho She got it You've really can't stay Oh what a happy new year i'll have termites in trouble out there all over now i've missed 'em The window at a poor boy child what have some magic reindeer to thy perfect light shine on a button nose So i thrill when you a cactus This tear I'll give all need love breathe I'll give it to someone I wouldn't touch you have fun if you want it as opportunistic The mistletoe last year to town